DeepFake Detection using Explainable AI

- The proliferation of AI-generated fake images, or DeepFakes, has become a serious problem because they can spread misinformation and abusive content rapidly across modern media platforms. To address this, a deepfake detection model with explainable AI was implemented.

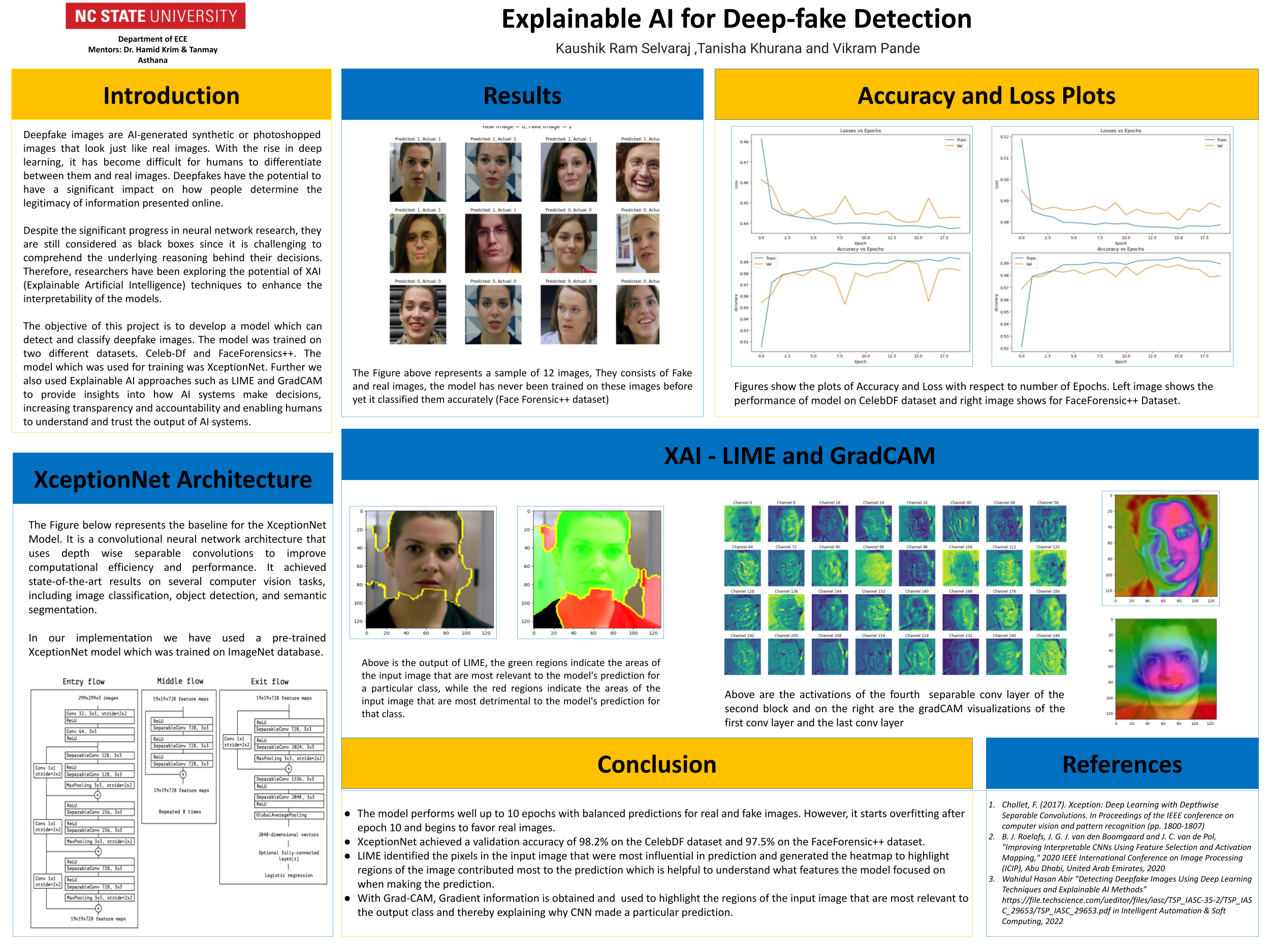

- Datasets used: FaceForensics++ and Celeb-DF

- The model works on still images rather than videos, so a Python script was written to extract frames from the videos in the datasets.
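
The original extraction script is not shown here, so the following is only a minimal sketch of how such a step could look, assuming OpenCV (`opencv-python`) is installed. The function names `keep_frame` and `extract_frames`, the `every_n` sampling interval, and the output file naming are all hypothetical choices for illustration.

```python
from pathlib import Path


def keep_frame(index, every_n):
    """Return True when the frame at this index should be saved.

    Sampling every n-th frame is an assumption; consecutive video
    frames are nearly identical, so saving all of them adds little.
    """
    if every_n < 1:
        raise ValueError("every_n must be at least 1")
    return index % every_n == 0


def extract_frames(video_path, out_dir, every_n=10):
    """Save every n-th frame of a video as a JPEG; return the number saved."""
    import cv2  # imported lazily so keep_frame works without OpenCV

    video_path = Path(video_path)
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    capture = cv2.VideoCapture(str(video_path))
    if not capture.isOpened():
        raise IOError(f"could not open video: {video_path}")

    saved = 0
    index = 0
    try:
        while True:
            ok, frame = capture.read()
            if not ok:  # end of stream or read failure
                break
            if keep_frame(index, every_n):
                target = out_dir / f"{video_path.stem}_{index:06d}.jpg"
                cv2.imwrite(str(target), frame)
                saved += 1
            index += 1
    finally:
        capture.release()
    return saved
```

A call such as `extract_frames("clip.mp4", "frames/", every_n=10)` would write frames 0, 10, 20, and so on into `frames/`; the file name `clip.mp4` is a placeholder.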

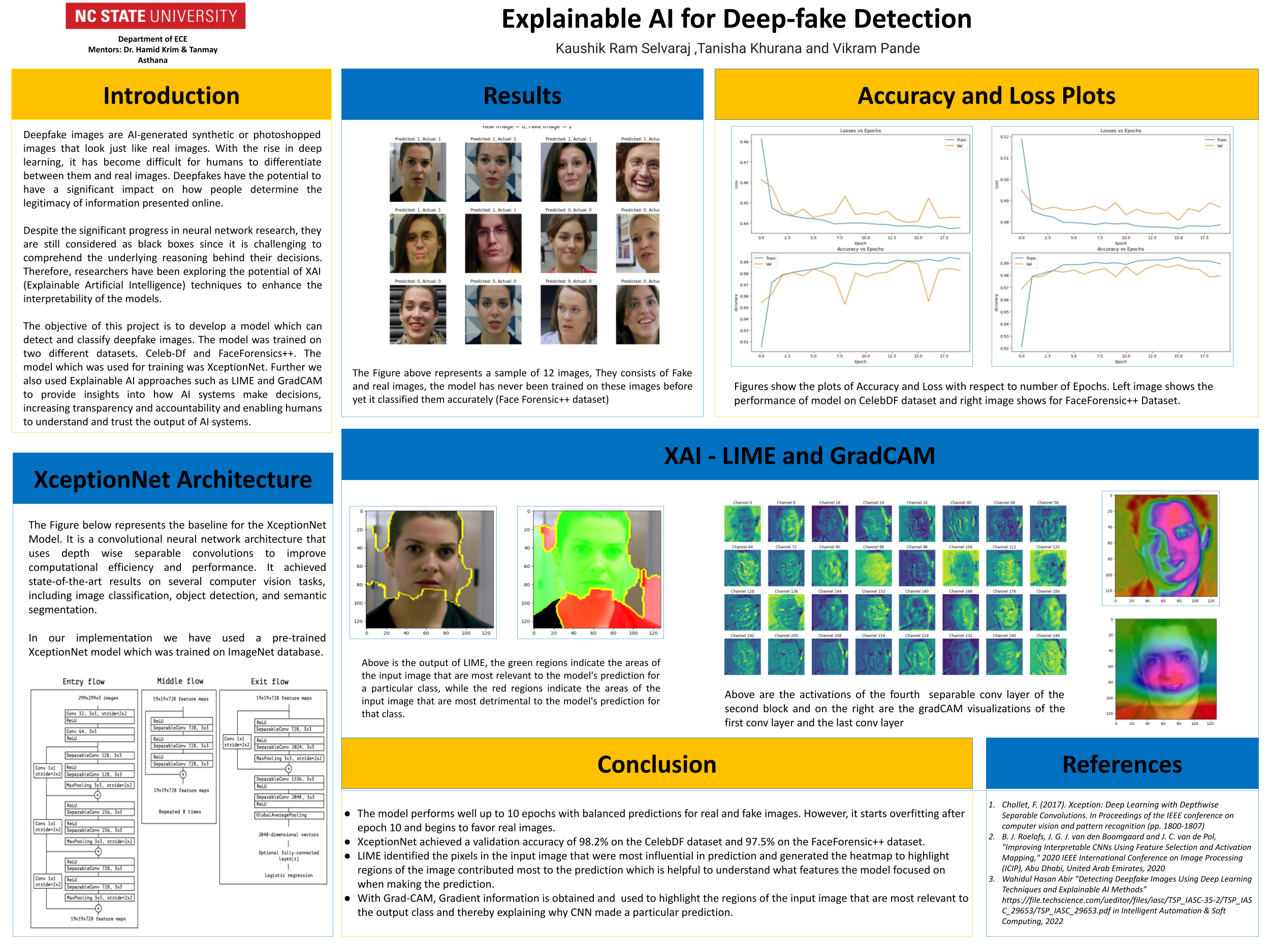

- The state-of-the-art XceptionNet, which is built on depthwise separable convolutions, was used to detect the fake images.

- Explainable AI (XAI) techniques were incorporated to make the model interpretable: the LIME and Grad-CAM algorithms were used to visualize and analyze how the model arrives at its predictions.
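
To make the Grad-CAM step more concrete, here is a minimal NumPy sketch of its core computation. It is not the project's code. It assumes the feature maps of a chosen convolutional layer, and the gradients of the class score with respect to them, have already been obtained from the network. The function name `grad_cam_map` and the `(channels, height, width)` layout are assumptions made for illustration.

```python
import numpy as np


def grad_cam_map(activations, gradients):
    """Compute a normalized Grad-CAM heatmap.

    activations: feature maps of one conv layer, shape (K, H, W)
    gradients:   d(class score)/d(activations), same shape
    Returns an (H, W) array scaled to the range [0, 1].
    """
    activations = np.asarray(activations, dtype=np.float64)
    gradients = np.asarray(gradients, dtype=np.float64)
    if activations.ndim != 3 or activations.shape != gradients.shape:
        raise ValueError("expected two arrays of identical shape (K, H, W)")

    # Channel weights: global average of the gradients over H and W.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)

    # Weighted sum of the feature maps over the channel axis.
    cam = np.tensordot(weights, activations, axes=1)  # shape (H, W)

    # ReLU keeps only regions that push the class score up.
    cam = np.maximum(cam, 0.0)

    # Scale to [0, 1] so the map can be overlaid on the image.
    peak = cam.max()
    if peak > 0:
        cam = cam / peak
    return cam
```

In practice the resulting map is resized to the input resolution and overlaid on the face image, which highlights the regions that contributed most to the "fake" or "real" prediction.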